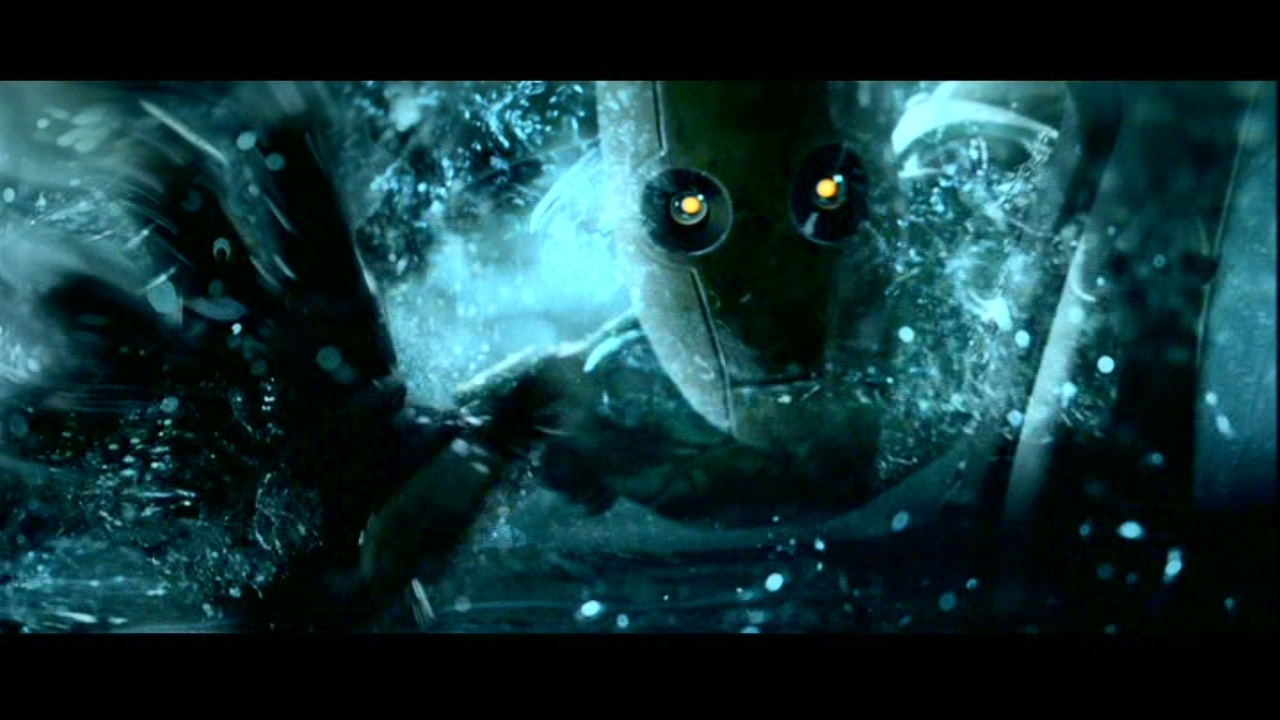

In this flashback scene from the movie “I, Robot,” the AI has to decide whom to save.

We first wrestled with the question of artificial intelligence, or AI for short, back in the spring of 2018. In that conversation we wondered about how to teach AI to have a moral compass, and what system or systems of morality should be our starting point.

Since then, such questions about AI have only arisen more frequently, especially with the further development of autonomous vehicles. One common way some of these issues have been debated is through the classic dilemma of ethical philosophy known as “the trolley problem.” Here is how it is typically presented:

Imagine you’re driving a trolley car. Suddenly the brakes fail, and on the track ahead of you are five workers you’ll run over. Now, you can steer onto another track, but on that track is one person who you will kill instead of the five: It’s the difference between unintentionally killing five people versus intentionally killing one. What should you do?

In our case, replace you, the driver, with the AI controlling your self-driving car. And to complicate things further, let’s give the AI more choices: Now it could crash into a crowd of pedestrians, or into someone’s grandma (or a mom pushing a stroller), or off the road entirely, sparing the strangers but killing you. In short, someone, or several someones, is going to die in this crash, and the AI has to decide. So who is it?

To be fair, critics have argued that the trolley problem is an inappropriate benchmark. As Simon Beard points out at Quartz (you can read the article by clicking here):

As the trolley driver, you are not responsible for the failure of the brakes or the presence of the workers on the track, so doing nothing means the unintentional death of five people. However, if we choose to intervene and switch tracks, then we intentionally kill one person as a means of saving the other five.

This philosophical issue is irrelevant to self-driving cars because they don’t have intentions.

Which means the decision the AI makes will reflect not its own intentions but ours, as its creators and programmers. There are other problems too, as the article points out, but this is the gist of it. How should an artificial intelligence make decisions that we ourselves find so difficult to wrestle with? As Beard himself puts it:

If we want to make ethical AI, we should be harnessing this ability and training self-driving cars to work in ways that we, as a society, can approve of and endorse. That is very unlikely to result from starting with philosophical first principles and working from there. Either philosophers of AI ethics like myself have to discard a lot of our theoretical baggage, or others should take on this work instead.

Join us for the conversation on Tuesday evening, Oct. 22, beginning at 7 pm at Homegrown Brewing Company on Lapeer Road in downtown Oxford.

And for another take on the ethics of AI, you can check out this article here, which also delves into the ethical question of workers losing their jobs to automation, a topic we discussed a few weeks back.